Wavefolding

Wavefolding¶

Wavefolding is a special case of waveshaping, working with periodic transfer functions. Depending on the pre-gain, the source signal gets folded back, once a maximum of the transfer function is reached. Compared to the previously introduced soft clipping or other methods of waveshaping, this adds many strong harmonics.

Periodic Shaping Function¶

A simple basic transfer function is a sine with the appropriate scaling factor. The pre-gain $g$ is the parameter for controling the intensity of the folding effect:

$$ y[n] = sin( g \frac{\pi}{2} x[n]) $$For an input signal $x$, limited to values between $-1$ and $1$, respectively for gains $g\leq1$, this results in a sinusoidal waveshaping function with saturation:

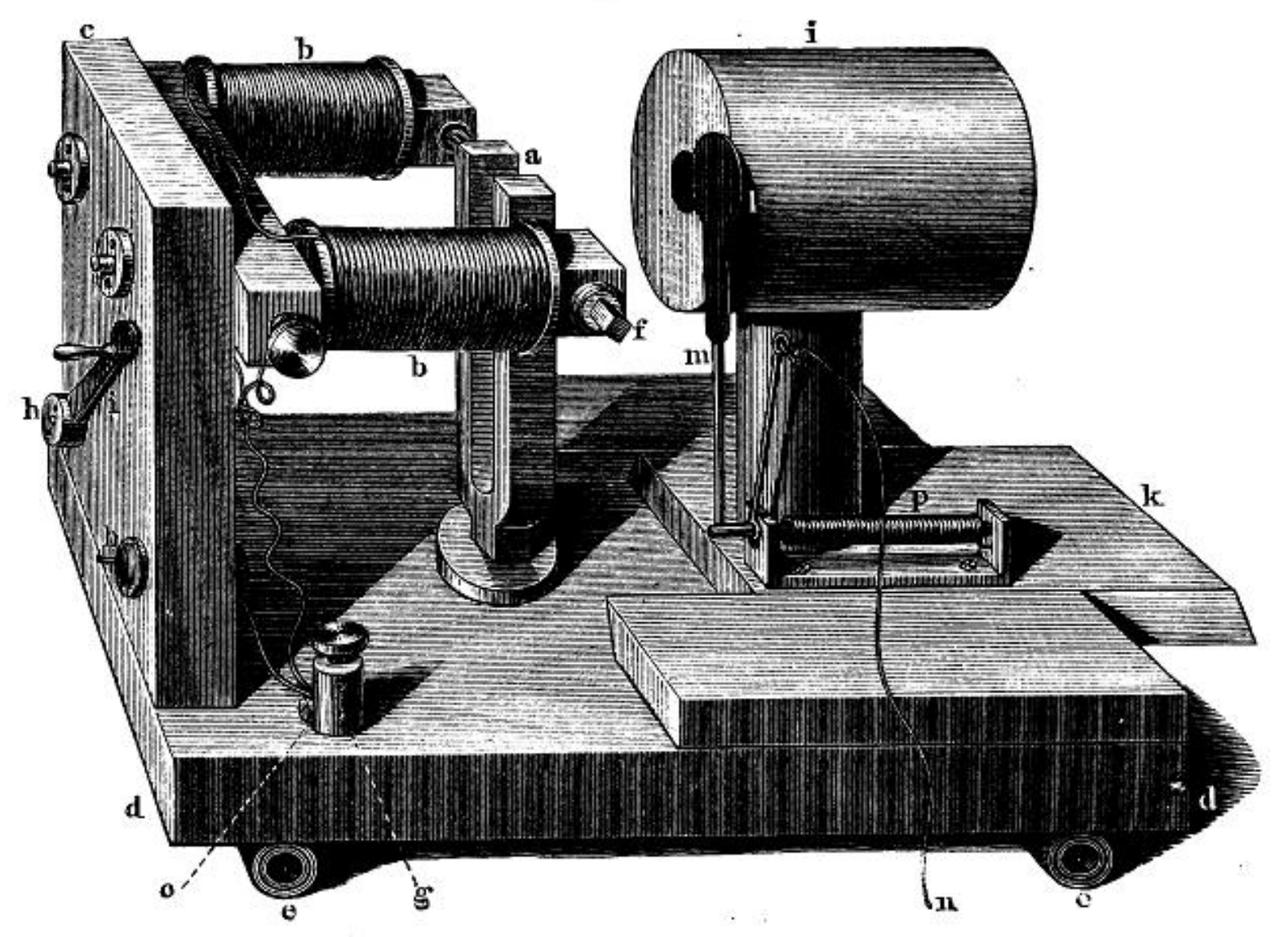

When the input signal exceeds the boundaries $-1$ and $1$, the signal does not clip but is folded back. This can be achieved by amplifying the input with an additional gain:

For a gain of $g=3$, the time-domain output signal looks as follows:

Spectrum for a Sinusoidal Input¶

The spectrum of wavefolding can be calculated by expressing the folding term as a Fourier series: The Jacobi–Anger expansion can be used for this purpose, with the pre-gain $g$:

$$ \sin(g \sin(x)) = 2 \sum\limits_{m=1}^{\infty} J_{2m-1}(g) \sin((2m-1)x) $$At this point it is already apparent that the resulting signal contains harmonics at odd integer multiples of the fundamental frequency $f_m = 100 \mathrm{Hz}\ (2 m -1)$. Their gain is determined by first kind Bessel functions $J_{2m-1}(g)$:

For the DFT this leads to:

$$ \begin{eqnarray} X[k] &=& 2 \sum\limits_{m=1}^{\infty} J_{2m-1}(g) \sin((2m-1)x) \sum\limits_{n=0}^{N-1} e^{-j 2 \pi k \frac{n}{N}} \\ % % % X[k] &=& 2 \sum\limits_{n=0}^{N-1} \sum\limits_{m=1}^{\infty} J_{2m-1}(g) \sin((2m-1)x)\ e^{-j 2 \pi k \frac{n}{N}} \\ % % % X[k] &=& 2 \sum\limits_{n=0}^{N-1} \sum\limits_{m=1}^{\infty} J_{2m-1}(g) \frac{1}{2} \left( e^{j (2m-1)x} - e^{-j(2m-1)x} \right) % \sin((2n-1)x)\ e^{-j 2 \pi k \frac{n}{N}} \\ % % % X[k] &=& \sum\limits_{n=0}^{N-1} \sum\limits_{m=1}^{\infty} J_{2m-1}(g) \left( e^{-j 2 \pi k \frac{n}{N} + j (2m-1)x } - e^{-j 2 \pi k \frac{n}{N} -j(2m-1)x} \right) % \sin((2n-1)x)\ \end{eqnarray} $$With $x = 2 \pi \frac{f_0}{f_s} n$

$$ X[k] = \sum\limits_{n=0}^{N-1} \sum\limits_{m=1}^{\infty} J_{2m-1}(g) \left( e^{-j 2 \pi k \frac{n}{N} + j (2m-1) 2 \pi \frac{f_0}{f_s} } - e^{-j 2 \pi k \frac{n}{N} -j(2m-1) 2 \pi \frac{f_0}{f_s}} \right) % \sin((2n-1)x)\ $$1 Hints on this by Peyam Tabrizian can be found here: https://youtu.be/C641y-z3aI0

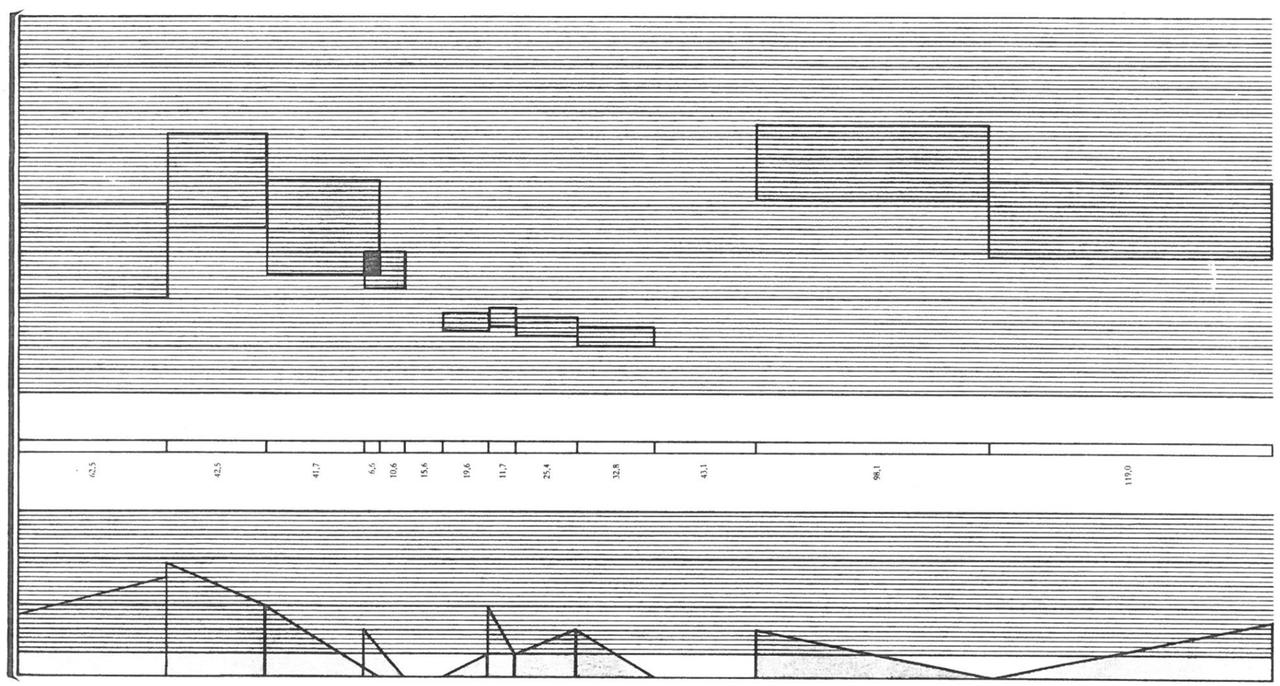

DFT Plots¶

The below plots show the spectra of the folding operation for a sine input of $100 \mathrm{Hz}$ at different gains. With increasing gain, partials are added at the odd integer multiples of the fundamental frequency $f_m = 100 \mathrm{Hz}\ (2 m -1)$: